Heap Internals & Exploitation

Deep reference on glibc ptmalloc2: chunk layout, free bins, tcache, safe-linking, UAF, double-free, heap overflow, and modern exploitation techniques.

The heap is the primary arena for dynamic memory in C and C++ programs. Most exploitation techniques at the heap layer abuse the allocator's unconditional trust of its own metadata — a trust you can violate through out-of-bounds writes, use-after-free, or double-free bugs.

These notes focus on glibc ptmalloc2, the allocator on most Linux systems. Concepts carry over to jemalloc and tcmalloc with varying details.

Quick Reference

1. Chunk Anatomy

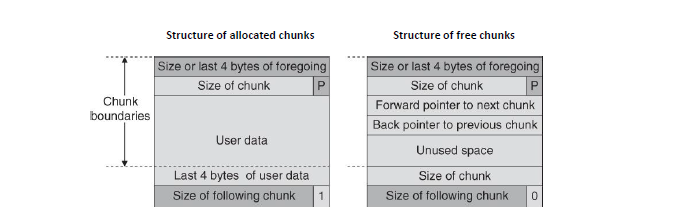

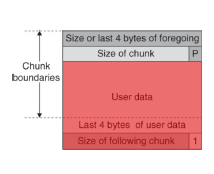

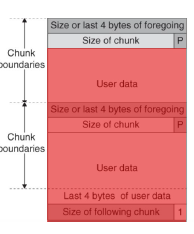

Every malloc call returns a pointer into the user region of a chunk. Directly before that pointer sits the chunk header managed — and trusted — by the allocator.

In-use chunk layout

(end of previous chunk's user data)

┌─────────────────────────────────────┐

│ prev_size (8 B) │ ← reused as user data when prev is in-use

│ size | flags (8 B) │ ← size of THIS chunk; 3 flags in low bits

├─────────────────────────────────────┤ ← malloc() returns pointer HERE ↓

│ │

│ user data (size − 16 B) │

│ │

└─────────────────────────────────────┘

(next chunk's prev_size starts here)

Free chunk — additional fields

┌─────────────────────────────────────┐

│ prev_size (8 B) │

│ size | flags (8 B) │

├─────────────────────────────────────┤

│ fd → next free chunk │

│ bk → prev free chunk │

│ fd_nextsize (large bin only) │

│ bk_nextsize (large bin only) │

└─────────────────────────────────────┘

Size flags (low 3 bits of size)

size field sits immediately before user data, even a one-byte overflow (e.g., writing a null terminator past a string buffer) can flip the PREV_INUSE bit of the next chunk, tricking the allocator into a dangerous backward consolidation.2. Free Bins

After free(p), glibc places the chunk into one of five bin structures — checked in order during the next malloc:

- Per-thread, no lock

- 64 bins for sizes 24–1032 B (16-B steps)

- Max 7 entries per bin (count enforced)

- Singly linked — only

fd - Checked first on both alloc and free

- Sizes ≤ 64 B (default); up to 80 B

- 10 singly-linked LIFO bins

- No coalescing (speed trade-off)

- Consolidated only on

malloc_consolidate()

- Single circular doubly-linked list

- First stop for non-fast freed chunks

- Chunks sorted into small/large on the next malloc scan

- 62 bins, sizes 16–504 B

- Doubly-linked FIFO (exact-size match)

- Chunks coalesce with neighbours on free

- 63 bins for sizes ≥ 512 B

- Doubly linked + size-sorted (best-fit)

- Extra

fd_nextsize / bk_nextsizeskip-list chains

- Last resort — extend heap via

sbrk/mmap - Always at the high end of the arena

- No prior free chunk to coalesce backwards with

malloc lookup order

malloc(n):

1. tcache[size_class] → O(1) thread-local pop (≥ 2.26)

2. fastbin[size_class] → O(1) if size ≤ fast threshold

3. small bin[size_class] → exact fit, doubly-linked pop

4. unsorted bin → linear scan; splits oversized chunks

5. large bin → best-fit in a size-sorted list

6. top chunk → carve from wilderness, or sbrk/mmap

3. Tcache Deep Dive (glibc ≥ 2.26)

Tcache is a per-thread, lock-free singly-linked cache that makes small allocations near-free in latency. Its initial design lacked almost all integrity checks — making it the dominant exploitation target for several years.

Internal structures

typedef struct tcache_entry {

struct tcache_entry *next; /* singly linked */

struct tcache_perthread_struct *key; /* 2.29+: double-free canary */

} tcache_entry;

typedef struct tcache_perthread_struct {

uint16_t counts[TCACHE_MAX_BINS]; /* entries per bin (max 7) */

tcache_entry *entries[TCACHE_MAX_BINS]; /* head of each bin */

} tcache_perthread_struct;The tcache_perthread_struct itself lives at the very start of the heap, allocated by malloc during thread initialisation. Corrupting it gives complete control over all future small allocations.

put / get (simplified)

static void tcache_put(mchunkptr chunk, size_t tc_idx) {

tcache_entry *e = chunk2mem(chunk);

e->key = tcache; /* 2.29+: tag for double-free check */

e->next = PROTECT_PTR(&e->next, /* 2.32+: safe-linking */

tcache->entries[tc_idx]);

tcache->entries[tc_idx] = e;

++(tcache->counts[tc_idx]);

}

static void *tcache_get(size_t tc_idx) {

tcache_entry *e = tcache->entries[tc_idx];

tcache->entries[tc_idx] = REVEAL_PTR(e->next);

--(tcache->counts[tc_idx]);

e->key = NULL;

return (void *)e;

}Safe-linking (glibc ≥ 2.32)

The next pointer stored in tcache (and fastbin) entries is XOR-mangled with a secret derived from its own storage address:

#define PROTECT_PTR(pos, ptr) \

((__typeof (ptr))((((size_t)(pos)) >> 12) ^ ((size_t)(ptr))))

#define REVEAL_PTR(ptr) PROTECT_PTR(&ptr, ptr)The slot address pos is right-shifted 12 bits (drops the page-offset) then XOR-ed with the pointer value. Forging a valid mangled pointer requires knowing the heap address of the slot — which demands a prior heap leak.

next field of a freed chunk through a dangling pointer or OOB read — this leaks a mangled pointer. XOR it with slot_addr >> 12 to recover the raw heap address, then compute the correct mangled value for your forged pointer.4. Allocator Mitigations

tcache_entry.key is set to the tcache header pointer on free; if the same pattern is seen on re-entry, abort() is called.next pointers are XOR'd with a slot-derived secret. Forging a pointer requires a heap address leak.tcache->counts[i] is incremented on put and decremented on get. Underflow or overflow triggers abort.tcache_get the returned pointer must be MIN_CHUNK_SIZE-aligned. Misalignment aborts.fd->bk == chunk and bk->fd == chunk before relinking. Classic write-what-where now aborts unless you control both pointers.5. Bug Classes

Uninitialized use

Reading fields of a freshly malloc'd object before writing them. Heap memory is not zeroed by malloc.

typedef struct { int len; char *buf; } Msg;

Msg *m = malloc(sizeof *m);

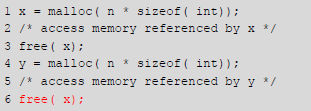

printf("%d\n", m->len); // UB: reading stale bytes from a prior allocationcalloc or explicit memset when zero-initialization matters.Unchecked malloc return

char *p = malloc(n);

memcpy(p, src, n); // segfault — or null-pointer write — if p == NULLOn modern Linux, mmap_min_addr prevents null-page mapping in userspace, so null-deref is typically a crash. In the kernel or embedded contexts it may be exploitable.

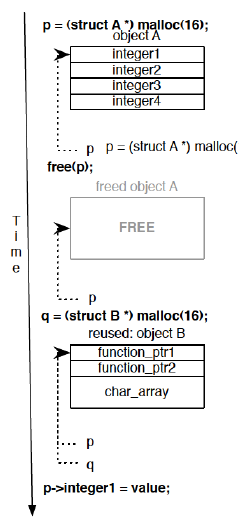

Use-after-free (UAF)

char *a = malloc(16);

free(a);

a[2] = 0x41; // UAF write — 'a' is now on the tcache free listTimeline:

t0 malloc(16) → 0xADDR allocate

t1 use(0xADDR)

t2 free(0xADDR) chunk goes to tcache/fastbin

t3 malloc(16) → 0xADDR SAME region returned to a second caller

t4 a[2] = 0x41 clobbers the second caller's object

Stack variant — use-after-return:

char *bad(void) {

char local[16];

return local; // pointer to a stack frame that no longer exists

}Type confusion — free object of type A, reallocate as type B at the same address, use A's dangling pointer to reach B's fields.

Pointer hijack — if the freed object contained a function pointer or vtable, overwrite it via the dangling pointer.

Metadata leak — freed chunk's fd/bk now hold heap (or libc) addresses; read them through the dangling pointer for a leak.

Double free

free(p);

if (error_condition)

free(p); // double free — corrupts tcache / fastbin listIn tcache before glibc 2.29 (no key check), this directly creates a cycle:

tcache bin (size class): p → p → p → … (self-referential)

malloc(sz) → p (pop first entry)

malloc(sz) → p (same address again — two owners of one allocation)

NULL — free(NULL) is defined as a no-op. Adopt clear ownership semantics so exactly one code path frees each allocation.Memory leak

for (int i = 0; i < N; i++) {

char *buf = malloc(1 << 20); // 1 MiB

process(buf);

/* forgot: free(buf) */

}

// RSS grows unbounded; heap becomes fragmentedLeaks rarely cause direct exploitability but degrade availability (OOM) and may let an attacker indirectly shape heap layout for later exploitation.

Heap overflow

Non-exploitable layout — overflow lands in the top chunk:

char *buf = malloc(1024);

strcpy(buf, argv[1]); // overflow goes into wilderness; no free-list corruption

Exploitable layout — two adjacent allocations:

char *buf = malloc(1024);

char *buf2 = malloc(1024); // typically placed immediately after buf

strcpy(buf, argv[1]); // overflow lands on buf2's chunk header

free(buf2); // allocator consults the corrupted headerWhat even a single-byte overflow can achieve:

- Flip

PREV_INUSE→ trigger false backward consolidation - Overwrite

size→ misalign future allocations - Plant fake

fd/bk→ write-what-where on unlink (older glibc)

6. Exploitation Techniques

Classic Unlink / Frontlink

Precondition: Heap overflow into a free chunk's fd/bk fields.

Forge Y.fd = &WRITEVAL

Forge Y.bk = &TARGET − 0x10 (so bk→fd lands on TARGET)

unlink(Y):

fd→bk = bk → *(TARGET) = WRITEVAL ← arbitrary write

bk→fd = fd → *(WRITEVAL+8) = &TARGET-8

A write-what-where primitive. Patched in glibc 2.3.4 with the consistency check fd→bk == chunk && bk→fd == chunk.

P that holds the address of the fake chunk, set fd = P − 0x18, bk = P − 0x10. Then fd→bk and bk→fd both resolve to the fake chunk, passing the check.Fastbin Corruption

Precondition: UAF or double-free on a fastbin-sized chunk.

Step 1 free(A) → fastbin: [ A → NULL ]

Step 2 free(A) again → fastbin: [ A → A ] (old glibc: only checks top-of-bin ≠ A)

Step 3 malloc(sz) → returns A; write A.fd = FAKE − 8

Step 4 malloc(sz) → returns A again (drain)

Step 5 malloc(sz) → returns FAKE (allocator treats FAKE as a valid chunk)

Use step 5 to write into FAKE — typically __malloc_hook, __free_hook, or a GOT entry.

Tcache Dup / Poisoning

Before glibc 2.32 (no safe-linking):

free(A) → tcache: [ A ]

free(A) → tcache: [ A → A ]

malloc(sz) → A write A->next = &TARGET

malloc(sz) → A (drain the duplicate)

malloc(sz) → TARGET allocator hands us the target address as "fresh" memory

With safe-linking (≥ 2.32), step 3 requires a mangled pointer:

size_t slot = (size_t)&A->next;

A->next = (tcache_entry *)((slot >> 12) ^ (size_t)&TARGET);Which requires &A->next — obtained from a heap address leak.

House of Force (pre-glibc 2.29)

Precondition: Overflow into the top chunk's size field.

1. Overwrite top->size = 0xffffffffffffffff

2. malloc(req) where req wraps 64-bit arithmetic to place the new

top pointer at TARGET − 2*sizeof(size_t)

3. The following malloc(any) returns TARGET — arbitrary allocation

Mitigated in glibc 2.29: top chunk size is sanity-checked against arena bounds.

House of Einherjar

Precondition: Off-by-one null-byte overflow into the low byte of the next chunk's size (clearing PREV_INUSE) and the ability to forge a fake free chunk somewhere in memory.

1. Plant fake chunk header at address FAKE

2. Off-by-one: clear PREV_INUSE of next chunk; set its prev_size = distance to FAKE

3. free(next chunk) → backward consolidate lands at FAKE

4. malloc(N) → returns chunk overlapping FAKE + live adjacent object

(overlap gives read/write over the adjacent object)

Heap Spray

Allocate many same-size objects to deterministically fill bins and predict heap layout:

void *spray[256];

for (int i = 0; i < 256; i++)

spray[i] = malloc(sz); // fill tcache + fastbins

for (int i = 0; i < 256; i++)

free(spray[i]); // drain — next alloc is at a known addressTurns a probabilistic exploit into a reliable one. Essential when ASLR is active.

7. Tooling

valgrind --leak-check=full ./bin-fsanitize=address,undefined -gheap / bins / vis_heap_chunksmalloc_stats(); /* writes to stderr */LD_PRELOAD=libdislocator.so ./binpwndbg> heapinfoEssential pwndbg commands

heap walk all chunks in the main arena

bins show fastbins, unsorted, small, large bins

tcachebins dump per-thread tcache entries + counts

vis_heap_chunks N color-coded visual map of N chunks

find_fake_fast ADDR find memory that looks like a valid fastbin chunk

malloc_chunk ADDR parse chunk header at ADDR

try_free ADDR simulate a free() call to check what glibc would do

Attack Checklist

- Identify the primitive — overflow / UAF / double-free / type confusion

- Leak addresses — read a freed chunk's fd/bk (unsorted bin → libc leak) or a tcache pointer (heap leak)

- Shape the heap — spray/drain to control chunk adjacency and bin state

- Corrupt a free-list pointer — redirect the next allocation to a target region (GOT, hook, vtable)

- Write your payload — overwrite

__free_hook,__malloc_hook, a GOT entry, or a C++ vtable pointer - Trigger — call the overwritten hook/function to gain control flow